The recent COVID-19 outbreak has had ripple effects across almost every industry. Around the world, the global pandemic has altered the way we live, socialize and even conduct business. In this unprecedented scenario clouded with uncertainty we all, especially digital marketers, are wondering: What needs to change? And how drastically?

When it comes to search engine optimization strategies, digital marketing and content agencies are continually researching the latest trends and evolving best practices. In the current environment, the role of a top digital marketing agency is to keep a pulse on the present, while also looking forward to strategies that will drive long-term success. Here at Bluetext, our digital marketing analysts are harnessing a variety of tactics to support overall business goals and serve users the best we can during these uncertain times. Check out the top ways we’ve been monitoring and optimizing around current events.

Strength in Numbers

When in doubt, trust the data! Using top marketing analytics tools, such as SEMRush and Moz, you can track the aggregate behavior of online users. Gathering the most up-to-date data can be tricky, so don’t do it alone. The more expertise and tools, the better. Trust a marketing analytics agency to help break down the numbers into a comprehensible story of website traffic. Use professional tools, such as Google Analytics and Google Search Console, to monitor the recent fluctuations in your page traffic. Do a keyword analysis of your current keyword list to see if search volume has shifted. Google Trends is a great tool for identifying emerging patterns. Are there new phrases your customers are searching for? If the language has evolved, so should your SEO strategy. If you have transcripts from customer service chatbots, these can provide valuable insight into current needs.

In short, the data doesn’t lie. Businesses need to understand shifts in search traffic to get as clear a picture as possible of whether to pivot their SEO strategy.

Content is King — Still

Ultimately any changes to your SEO strategy should be driven by your unique business needs. For example, a brick-and-mortar store will need to focus on how it can serve customers at home. If your business was already available online, you may be experiencing altered user behavior as people spend more time at home and online. Every business should ask: “Is the content relevant to current needs?” Your messages may need to shift to be sensitive to the current environment. A complete overhaul is neither necessary nor appropriate. However, if there are opportunities to generate new content that supports your users in a unique time, do so. And if your business is considered essential or has been significantly impacted, you should create a dedicated page to capture all relevant coronavirus traffic. Keep the page simple, focused and sensitive. Don’t try to provide the latest breaking news; instead, explain exactly what your company is doing and how. If your business has been minimally affected, perhaps there is an opportunity to contribute to emerging conversations. Exploding Topics is a valuable tool for up-to-date trends across search engines and social media mentions. At the end of the day, users are seeking timely and accurate information now and long after the dust has settled on this pandemic.

Optimize Often

Search engine optimization is never a “one and done” task. Any digital marketing strategy requires upkeep; such is the nature of an evolving industry. Now, more than ever, flexibility is paramount to staying afloat. Be proactive, be vigilant. Your SEO strategy will need re-evaluation in the upcoming weeks and months. No one can predict how long the pandemic will last, so you must be ready to pivot to any new or resurging customer needs.

In an unpredictable environment, one thing is certain: this is our new (remote) reality. Don’t expect old strategies to work as they once did, and don’t expect this shift to “blow over soon”. Your business should be prepared to remain relevant now more than ever. There will likely be long-term implications in behaviors and business operations. Get behind the shifts now and flex your agility. It will pay off in your long-term business health.

If you’re looking to partner with an agency to pivot your SEO strategy, let us know.

The decades-long reign of the PC is over, with mobile devices now making up more than 52% of all internet traffic. While plenty of people preach the importance of responsive website design, far fewer have articulated updated guidelines for the reality of today’s internet. Keenly aware of trends as ever, Google has continually refined its search algorithm to keep pace with an increasingly mobile and untethered internet. Advertisers, marketers, and website owners alike need to be aware of what these paradigm shifts are, and how they could impact their sites’ SEO.

Cellphones’ bountiful data has empowered Google to enhance its search engine. Search results are more custom than ever before, incorporating key differentiating factors like time of day, weather, and geography. The search results for a morning bagel in Washington D.C. will look entirely different three hours later in San Francisco.

Optimizing for Local Search

More so than ever before, websites need to be local. Gone are the days of simply tacking on addresses and a list of phone numbers. To be competitive in 2020, websites need to address the mindset and inquiries of the region they serve, be it a street, coast, or country. A quintessential, doughy, foldable New York slice is in stark contrast to a dense, deep-dish pie from Chicago. The top result for a pizza in Manhattan will not waste content on merely its cheese, sauce, and pepperoni, but will instead focus on what distinguishes its slice from its New York brethren. Language, context, and local distinctions are now a mandatory part of website content strategy.

Dealing with Short Attention Spans

Major changes to search algorithms are only some of the changes introduced by the rise of mobile. With the ubiquity of the internet and easily accessible information, attention spans online are shorter than ever, even more so on mobile, where screen space comes at a steep premium. Hero zones should be leveraged accordingly: a hero should state the most critical information concisely and contain a quick and simple CTA or takeaway. Organic visitors who cannot immediately find an answer to their search query after a glance and a few swipes will assuredly bounce away to a competitor.

Search and Virtual Assistants

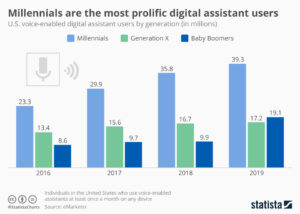

Smartphones’ impact on websites has not been limited to mobility and smaller screens. Virtual assistants like Amazon’s Alexa, Google Assistant, and Apple’s Siri fundamentally change how people browse the internet. For many people on the go, the automated search functionality provided by these virtual assistants has all but replaced a typical Google search.

How Google and the other virtual assistants parse webpages and present them for voice search is a complex topic, but the vital SEO fundamentals remain in place. Research demonstrates that people are, unsurprisingly, far more conversational in their wording in a voice search than in a typed-in search. Optimized content thus needs to address this directly, often through blog posts that cover such frequent, informal topics as “What is the best X” or “Y versus Z”.

Google has been increasingly leveraging its structured data for voice search results, largely due to its predictable format and parseable nature. For best results, website owners need to cross-reference website content and identify what data could be passed off to Google using structured data. Articles, menus, locations, events, and reviews are just a handful of the many structured data formats that Google accepts. Conveniently, Google now provides a simple tutorial for anybody familiar with HTML to get started on incorporating structured data and improving their site for voice search.
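To make the idea of structured data concrete, here is a minimal sketch of how a location could be described using the schema.org vocabulary in JSON-LD form. The business details and the helper names (`local_business_jsonld`, `to_script_tag`) are hypothetical and exist only for illustration; which properties matter for your site is something to confirm against Google's own documentation.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, telephone):
    """Build a schema.org LocalBusiness description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }


def to_script_tag(data):
    """Wrap the JSON-LD in the script tag that would be placed in the page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical business details, for illustration only.
example = local_business_jsonld(
    "Example Pizza Co.", "123 Example St", "New York", "NY", "10001", "+1-555-0100"
)
print(to_script_tag(example))
```

The resulting tag would sit in the page's HTML, so the address and phone number that visitors read are also available in a predictable, parseable form.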

The shift to mobile devices has opened up new avenues for content creation and design. Location and voice were unheard-of topics even a decade ago, but they are here to stay for organic search. It’s up to website owners and marketers whether they take advantage of these new strategies, or get left in the dust.

Content marketing companies consistently stress the importance of SEO. However, the world of SEO can be tricky to navigate, especially since it is not always easy to understand what it is. As an expert content marketing company, Bluetext knows the ins-and-outs and the most effective ways to audit your current SEO process. To help you further understand the importance and relevance of SEO, we’re sharing some important insights. Here are the top 5 things you may not know about SEO:

- Relevancy Matters

Throughout the years, SEO standards have seen many changes. Search engines still reward those who backlink and use keywords, but only to a certain extent. In other words, it is important to avoid packing keywords and backlinks into web pages, as search engine crawling algorithms can tell the difference between valuable and non-valuable keywords and backlinks. To ensure that search engines know your content is valuable, focus on hyperlinking relevant keywords and phrases. For example, linking keywords like ‘article about brand evolution’ is better than linking words like ‘read our article about brand evolution here.’ Other ways to keep your content relevant are getting rid of broken links and linking to newer resources.

- Update. Update. Update.

One thing many people forget to do is to update older content. For example, as good as it feels to hit submit on a blog post, it shouldn’t be the last time you look at or edit that post. It is crucial to review your older content to know what needs to be updated with new information. Although it may feel like you should solely focus on driving leads to newer campaigns, pages, and posts, investing time into updating older content can be an incredibly helpful way to get users to your pages. Moreover, crawling algorithms favor new content, so updating old content can be a great way to land your work on the first page.

- Images Should Be Optimized

Images are incredibly important to users during their search. However, it can still be very difficult for search engine crawlers to fully make sense of images. Alternative text is a great way to help search engines understand what your image is about. Additionally, when you further optimize your image files, you improve user experience, which, in turn, will decrease your bounce rate and increase average session duration. Here are a few other ways to optimize your photos:

- Reduce the size of your image files so the page loads faster

- Include a description that can help the user find what they are looking for

- Add a title to the image to help provide additional information
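As a rough illustration of the alternative-text advice, the sketch below scans a page's HTML and lists images whose alt text is missing or empty. It uses only Python's standard library; the class and function names and the sample markup are hypothetical, and a real audit would cover more than this one attribute.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collect the src of every <img> tag whose alt text is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Flag images with no alt attribute or with only whitespace in it.
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images that lack usable alt text."""
    audit = ImageAudit()
    audit.feed(html)
    audit.close()
    return audit.missing_alt


# Hypothetical page fragment, for illustration only.
sample = (
    '<img src="hero.jpg" alt="Team reviewing a campaign dashboard">'
    '<img src="chart.png">'
    '<img src="logo.png" alt="">'
)
print(images_missing_alt(sample))
```

Note that an empty alt attribute is sometimes intentional for purely decorative images, so the output is a list to review rather than a list of errors.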

- Competitor Discovery Is Vital

There’s no doubt you know who your competition is. In fact, you probably keep a pretty close eye on your top competitors to get a sense of what they are doing and how you could improve (at least you should be). But, in today’s age of digital evolution, new brands are constantly popping up and established brands are constantly changing. As a top content marketing company, Bluetext suggests monitoring your top targeted keywords to see whether your competitors have started to rank above you. Conducting a proper competitor audit can help you get a game-plan in place before you lose your position and views.

- SEO Varies Country-To-Country

Having a high-ranking webpage can be a tough feat in and of itself. If you do business outside of the US, international SEO deserves separate attention from domestic SEO and adds another layer to the conversation. Although you may rank well on Google.com, you may not rank well on Google.ca or Google.mx. It is important to look at your audience and decide where your attention should be focused.

Even for a top content marketing company, there are still a lot of question marks surrounding SEO since search engines use proprietary and unknown algorithms. Even with these unknowns in the world of SEO, we still do know things that search engines reward. When launching a new campaign or just updating older content, be sure to keep our SEO tips in mind!

Google has done it again, quietly making a significant change to the way its algorithms process Google AdWords that could be a significant challenge for digital marketing if not understood and managed. At Bluetext, we closely monitor all updates to how Google’s search engine returns query results, and we have posted a number of blogs to let our clients know about these changes and how to address them.

This time, it’s a little different because this change, which Google announced on March 17, addresses AdWords, the tool companies use to implement their keyword purchasing strategies, rather than a revision of its organic search functionality. With this change, marketers may need to adjust their spending programs for purchasing the keywords that drive traffic to their sites.

In the past with AdWords, marketers would select a set of short-tail search terms that would be part of their search advertising mix. For example, a hotel chain might include simple key phrases like “best hotels in Nashville,” mirroring the way customers search for a list of places to stay. Up until the latest change, that exact phrase would drive the AdWords results. But Google has decided that people don’t always type their searches as that exact phrase, dropping the “in” by mistake or even misspelling it as “on.” As a result, Google has decided to expand its close variant matching capabilities to include additional rewording and reordering for exact match keywords.

What does that mean? In layman’s terms, Google will now view what it calls “function words” – that is, prepositions (in, to), conjunctions (for, but), articles (a, the) and similar “connectors” – as terms that do not actually impact the “intent” behind the query. It will ignore these function words in AdWords exact match campaigns, so that the intent of the query will be more important than the precise use of these words.

Sounds like a good move, because if you search for “best hotels in Nashville” or “Nashville best hotels,” the result will be the same in AdWords.

But what if the search is for “flights to Nashville,” which isn’t the same as “flights from Nashville”? Ignoring the function words “to” or “from” would change the purpose of the query. Google says not to worry, its algorithm will recognize the difference and not ignore those words since they do impact the intent.
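The matching behavior described above can be pictured with a small sketch. This is not Google's algorithm, which is not public; it is a simplified illustration that drops an assumed list of function words and ignores word order, while deliberately leaving “to” and “from” out of that list so that they still count. The word list and function names are assumptions made for this example.

```python
# Assumed list of function words that usually do not change what a searcher wants.
# "to" and "from" are deliberately left out because they can change the intent.
IGNORABLE_WORDS = {"in", "on", "a", "an", "the", "for", "but", "and", "of"}


def normalize(query):
    """Lowercase the query, drop ignorable words, and sort so word order is ignored."""
    words = query.lower().split()
    return tuple(sorted(word for word in words if word not in IGNORABLE_WORDS))


def is_close_variant(keyword, query):
    """Return True if the query matches the keyword once function words are ignored."""
    return normalize(keyword) == normalize(query)


print(is_close_variant("best hotels in Nashville", "Nashville best hotels"))
print(is_close_variant("flights to Nashville", "flights from Nashville"))
```

Even this toy version shows why precision matters: because it ignores word order, it would treat “flights from Boston to Nashville” and “flights from Nashville to Boston” as the same query, which is exactly the kind of edge case worth checking in your own keyword list.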

Hopefully, Google will make good on that promise. But advertisers who have been briefed on this revision aren’t too certain. Their carefully constructed AdWords investments might take a hit if the function words are not managed precisely to meet this new approach.

We like the old adage of “Trust but verify.” While we take Google at its word, we know there are always growing pains with these types of revisions. For our clients, we are recommending that they carefully review the terms they are including in their AdWords mix. Our advice: Be as precise as you can and factor in how these function words might be perceived before pulling the trigger. Losing traffic to your site because of the placement of a simple word should be a real concern.

Want to think more about your AdWords, search and SEO strategies? Bluetext can help.

As we have written many times, including on the Google “Hummingbird” release and “Mobilegeddon,” search engine optimization is a never-ending game of cat and mouse. The mouse–anyone with a website that they want to get noticed–is always trying to find shortcuts that make their website come up high in a Google search. The cat–the search engine team at Google–wants to eliminate any shortcuts, work-arounds or downright cheating. That’s the game that has been going on for many years, because doing it the way Google wants, which is by having great content that is fresh, original, updated and linked to by other sites, is hard. In fairness, Google has been doing a great job of reacting and responding to the evolving nature of Internet usage and protecting the integrity of its search engine, and has made it much more difficult to game the system.

So here’s where Penguin 4.0 comes in. One of the ways that Google has assessed where a particular webpage should rank in a search query is by looking at the inbound links to that page. The theory goes that if The New York Times is linking to it, it must have the type of credibility and value that warrants a high ranking. Lesser websites have value, just not as much as The New York Times. However, by making inbound links an important component in the rankings, the mice on the other side found ways to artificially have lots of websites link back to key pages, sometimes by buying links or engaging with networks of link builders. That was the first unintended consequence.

In response, Google launched a Penguin update in April 2012, to better catch sites deemed to be spamming its search results, in particular those doing so by buying links or obtaining them through link networks designed primarily to boost Google rankings. At first, the Penguin algorithm would identify suspicious links when it crawled the Internet, and simply ignore them when it performed its ranking in response to queries. But sometime in the middle of 2012, Google started punishing websites with bad links, not just ignoring them but actually driving page rankings down for offenders. And that set off a mad scramble as sites needed to somehow get “unlinked” from the bad sites. Which led to unintended consequence number two: Not only did webmasters have to worry about being punished for bad links, they also had to worry about rivals purposely inserting bad links to undermine their competitor’s search results. Ugh!

So, in October of 2012, Google tried to fix the problem it created by offering a “Disavow Links” tool. The tool essentially tells the Google crawlers, when they find a bad inbound link, that the website in question has “disavowed” that link and should no longer be punished for it. Here’s how Search Engine Land described the tool at the time: “Google’s link disavowal tool allows publishers to tell Google that they don’t want certain links from external sites to be considered as part of Google’s system of counting links to rank web sites. Some sites want to do this because they’ve purchased links, a violation of Google’s policies, and may suffer a penalty if they can’t get the links removed. Other sites may want to remove links gained from participating in bad link networks or for other reasons.”

And that created yet another unintended consequence, because, unfortunately, the Penguin algorithm wasn’t updated on a regular basis. So for websites trying to clean up their links, as Search Engine Land put it, “Those sites would remain penalized even if they improved and changed until the next time the filter ran, which could take months. The last Penguin update, Penguin 3.0, happened on October 17, 2014. Any sites hit by it have waited nearly two years for the chance to be free.”

Penguin 4.0 addresses that by integrating the Penguin “filter” into the regular crawler sweeps that assess websites on an ongoing basis. Waiting for up to two years for a refresh is now a thing of the past, as suspect pages will now be identified–or freed because they are now clean–on a regular basis.

What does this mean for websites? It’s what we’ve been writing now for a half dozen years. Good SEO doesn’t just happen, and it can’t be manipulated. It takes hard work, an effective strategy, and a long-term view to create the kind of content and links that elevate your brand for your customers, prospects, and the Google search engine. For more tips on navigating Penguin, download our eBook now.

This goes to the heart of every company’s SEO strategy. The clues come in a patent filing for something called an “implied link.” Before I explain why this is important, let’s first take a trip back to the early days of SEO and link-building.

Early on, Google would evaluate where a site ranks for any given search by looking at how many other sites were linking back to that page. If you were a valuable site, visitors would link to you in order to share that with their audience or to cite you as a good resource. That type of analysis would seem like an obvious way to measure the quality of the site.

But SEO gurus are always trying to stay one step ahead, and once link-farms and other shady techniques for creating myriads of back-links became prevalent, Google recognized that there’s no way to verify whether a link was added because a user genuinely likes the content or whether the link was paid for. The quality of a link can be corrupted through a wide variety of Black Hat tricks, and thus the value of all links came into question.

And while Google has updated its algorithms on numerous occasions over the years, that doesn’t mean that links aren’t still valuable for SEO. They are just much less valuable than they once were. Google is now much more selective about the quality of the site that is doing the linking. The New York Times continues to be the gold standard for the most valuable links.

But what if a publication like the Times mentions a brand or its product without a hyperlink? Shouldn’t that carry some weight, even though it doesn’t include a URL?

That’s where Google’s patent comes into play. SEO insiders believe that the patent is related to last year’s Panda update, and that it describes a method for analyzing the value of “implied links,” that is, mentions on prominent sites without a link.

Let’s say the Times mentions in an article the website of NewCo as a great resource for a particular topic, but doesn’t include a link to NewCo’s website. Previously, there really wasn’t a measurable way for NewCo to benefit from that quality mention. With implied links, Google sees the mention in the Times article and factors that into its search ranking.

Implied links are also used as a sort of quality control tool for back-links in order to identify those that are most likely the result of Black Hat tricks. For example, if Google sees numerous incoming links from sites of questionable quality, it might search for implied links and find that no one is talking about that brand across the internet. Google looks at that evidence from the implied links to determine if the back-links are real and adjusts the rankings accordingly.
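To make the distinction between a linked mention and an implied one concrete, here is a small sketch that counts how often a brand name appears inside and outside of hyperlinks in a piece of HTML. How Google actually evaluates implied links is not public, so this only illustrates the concept; the class name, function name, and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class MentionCounter(HTMLParser):
    """Count brand mentions that fall inside <a> tags versus in plain text."""

    def __init__(self, brand):
        super().__init__()
        self.brand = brand.lower()
        self.link_depth = 0  # greater than zero while inside an <a> element
        self.linked = 0
        self.unlinked = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.link_depth += 1

    def handle_endtag(self, tag):
        if tag == "a" and self.link_depth > 0:
            self.link_depth -= 1

    def handle_data(self, data):
        hits = data.lower().count(self.brand)
        if self.link_depth > 0:
            self.linked += hits
        else:
            self.unlinked += hits


def count_mentions(html, brand):
    """Return (linked_mentions, unlinked_mentions) of the brand in the HTML."""
    counter = MentionCounter(brand)
    counter.feed(html)
    counter.close()
    return counter.linked, counter.unlinked


# Hypothetical article fragment, for illustration only.
article = (
    '<p>We like <a href="https://example.com">NewCo</a> for research. '
    "NewCo also publishes a helpful guide.</p>"
)
print(count_mentions(article, "NewCo"))
```

A tally like this can help you track where your brand is being discussed without a link, which is the kind of mention this section suggests is gaining weight.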

Here are four tips for adapting to Google’s focus on implied links:

• Don’t abandon your link-building strategy. Earned links are still effective when they come from valued sites. The most valuable links will still be for relevant, unique content.

• Brand reputation is key. When asking for mentions on other sites, try to have them use your brand name as much as possible. The same is true when you are posting on other sites. Use your brand name. Do the same in descriptive fields such as bios at the bottom of contributed content.

• Engage your audiences in conversation. Similar to word-of-mouth marketing, the more your brand name is being mentioned, even without links, the more it will benefit your SEO. Encourage that conversation as much as you can.

• Be creative and flexible. Google is always evolving its search engine algorithms. It’s difficult, but not impossible, to predict how they may change over the next year, or how effective today’s best practices will be tomorrow if you know how to follow the clues.

Ever since Google’s last significant evolution of its search engine algorithm, known as Hummingbird, the marketing world has been treading water trying to understand how to drive search traffic to digital campaigns and websites. In the pre-Hummingbird era, search could be gamed by gaining multiple links back to a page and installing keywords throughout the content. So-called “Black Hat” experts charged a lot of money to get around the rules. In today’s Hummingbird era, it’s no longer only about keywords, but rather having good content with a smart keyword strategy that is relevant to the target audience. Attempting to manipulate the system through meaningless links and keyword overload no longer affects the search results.

While the Google algorithm is extremely complex, SEO itself is not complicated. It’s actually very easy to understand. In sum, Google’s algorithms are designed to serve the best, most relevant content to users. That’s the filter that any company or organization needs to use when deciding whether a particular activity in its online strategy will have an impact on its SEO.

It’s just difficult to execute.

For example, if you’re thinking about blasting a request to bloggers to link back to your site, don’t bother. If they haven’t created good content, it won’t make a difference. Want to change the title of every page on your site to your key search term? It won’t work. Thinking about jamming every keyword into a blog post? If it’s not good content, don’t do it.

The challenge is determining the definition of “good content,” at least as far as Google is concerned. That’s where the hard work begins. Good content is not a subjective evaluation, but rather an analysis that the web page or blog post is relevant to users as measured both by the type of content and the extent of its sharing by other influential users. Let’s break those apart and take a closer look.

The merit of the content is important because if Hummingbird detects that it’s crammed full of keywords, or that it is copied from other sites or even within other pages of the same site, it will quickly discount the relevance. The Google algorithm will see right through those types of attempts to create SEO-weighted pages. Good content needs to be original and unique.

More importantly, good content is also a measurement of how much that content is linked to or shared by influential sites and individuals. When you have created a blog post or web page with good, relevant content, it is vital to share this with your intended audience and with influencers that they trust. This is the hard part. There are no shortcuts when it comes to developing good content and in getting it in front of your audience. Nor are there easy ways to identify trusted influencers and get the content in front of them.

For the content itself, the goal is not just to be informative and provide the types of information that the target audience is seeking, although that is important. The goal is also to have that information shared, whether via social media platforms or through other sites and feeds. That means it must be interesting and sometimes even provocative so that the intended audience takes the next step and slips it into its own networks. It should challenge the conventional wisdom, offer valuable and actionable insights and educate the audience with information not already known. And it must be relevant to the audience.

How do you find the right influencers for your audience? That’s not easy, either. At Bluetext, we do in-depth research into who is talking about the topics and issues important to our client campaigns, and then evaluate each of those potential influencers to determine the size and reach of their audience. These can include industry insiders, trade journalists and columnists, government officials, and academic experts.

A solid SEO strategy takes time and patience, and a lot of hard work. It’s not complicated, but it is difficult.
