The decades-long reign of the PC is over, with mobile devices now making up more than 52% of all internet traffic. While plenty of people preach the importance of responsive website design, far fewer have articulated updated guidelines for the reality of today’s internet. Keenly aware of trends as ever, Google has continually refined its search algorithm to keep pace with an increasingly mobile and untethered internet. Advertisers, marketers, and website owners alike need to be aware of what these paradigm shifts are, and how they could impact their sites’ SEO.

Cellphones’ bountiful data has empowered Google to enhance its search engine. Search results are more custom than ever before, incorporating key differentiating factors like time of day, weather, and geography. The search results for a morning bagel in Washington D.C. will look entirely different three hours later in San Francisco.

Optimizing for Local Search

More so than ever before, websites need to be local. Gone are the days of simply tacking on addresses and a list of phone numbers. To be competitive in 2020, websites need to address the mindset and inquiries of the region they serve, be it a street, coast, or country. A quintessential, doughy, foldable New York slice is in stark contrast to a dense, deep-dish pie from Chicago. The top result for a pizza in Manhattan will not waste content on merely its cheese, sauce, and pepperoni, but will focus on what distinguishes its slice from its New York brethren. Language, context, and local distinctions are now a mandatory part of website content strategy.

Dealing with Short Attention Spans

Changes to search algorithms are only some of the changes introduced by the rise of mobile. With the ubiquity of the internet and easily accessible information, attention spans online are shorter than ever, even more so on mobile, where screen space comes at a steep premium. Hero zones should be leveraged accordingly: a hero should state the most critical information concisely and contain a quick and simple CTA or takeaway. Organic visitors who cannot find an answer to their search query after a glance and a few swipes will assuredly bounce away to a competitor.

Search and Virtual Assistants

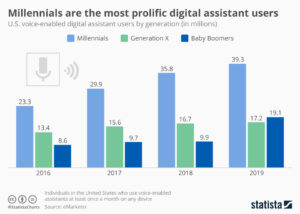

Smartphones’ impact on websites has not been limited to mobility and smaller screens. Virtual assistants like Amazon’s Alexa, Google Assistant, and Apple’s Siri fundamentally change how people browse the internet. For many people on the go, the automated search functionality provided by these virtual assistants has all but replaced a typical Google search.

How Google and the other virtual assistants parse webpages and present them for voice search is a complex topic, but the vital SEO fundamentals remain in place. Research demonstrates that people are, unsurprisingly, far more conversational in their wording when speaking than when typing a search. Optimized content needs to serve this need directly, and blogs that cover such frequent, informal topics as “What is the best X” or “Y versus Z” are often the best vehicle.

Google has been increasingly leveraging structured data for voice search results, largely due to its predictable format and parseable nature. For best results, website owners need to review their website content and identify what data could be passed to Google using structured data. Articles, menus, locations, events, and reviews are just a handful of the many structured data formats that Google accepts. Conveniently, Google now provides a simple tutorial for anybody familiar with HTML to get started on incorporating structured data and improving their site for voice search.
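
As a minimal sketch of what such structured data can look like, the Python snippet below builds a schema.org `Restaurant` description and wraps it in the `<script type="application/ld+json">` tag used for JSON-LD markup. The business name, address, and phone number are made-up placeholders, and the `build_restaurant_jsonld` helper is hypothetical; it is not part of any Google tooling.

```python
import json


def build_restaurant_jsonld(name, street, city, region, postal_code, phone, cuisine):
    """Return an HTML <script> tag holding a schema.org Restaurant description."""
    data = {
        "@context": "https://schema.org",
        "@type": "Restaurant",
        "name": name,
        "telephone": phone,
        "servesCuisine": cuisine,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only; no real business is described here.
snippet = build_restaurant_jsonld(
    name="Example Slice Shop",
    street="123 Example Street",
    city="New York",
    region="NY",
    postal_code="10001",
    phone="+1-555-0100",
    cuisine="Pizza",
)
print(snippet)
```

The resulting tag would typically be placed in the page’s HTML. Which properties a given search feature actually requires should be checked against Google’s own structured data documentation.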

The shift to mobile devices has opened up new avenues for content creation and design. Location and voice were unheard of topics even a decade ago, but they are here to stay for organic search. It’s up to website owners and marketers whether they take advantage of these new strategies, or get left in the dust.

Programmatic Advertising – No Signs of Slowing Down in 2020

If you spend any time at all surfing the web, you have encountered some form of programmatic advertising. The video ad that plays before you stream your favorite TV show? Programmatic. The banner ad that appears alongside the cooking recipe you’re reading? Programmatic. The sponsored article content that shows up as you’re scanning your favorite news site? You guessed it – it’s programmatic.

Programmatic Advertising – What is it?

Programmatic ad buying is the use of software to purchase digital advertising in real-time, as opposed to the traditional ad buying process that involves RFPs, human negotiations, and manual insertion orders. It allows digital agencies like Bluetext to strategically select where we want to show display, video and native ads, and when.

A huge benefit of programmatic advertising is that the software grants advertisers access to the biggest data providers in the game. Not only are we choosing where and when to show ads online, but we have the capabilities to choose who actually sees our ads. Advertisers are able to hand-select from thousands of audience segments collected by these data providers and layer those segments onto programmatic campaigns.

Why Invest in Programmatic?

While targeting capabilities and ease-of-use are two major benefits of investing in programmatic advertising, there are a number of reasons why digital agencies should hop on the programmatic bandwagon in 2020.

Here are Bluetext’s top 3 reasons for investing in programmatic advertising in 2020:

1. Artificial Intelligence (AI) and Machine Learning

Programmatic advertising already makes digital ad buying easier for advertisers than it ever was before, but AI and machine learning have simplified the process even more. For years, media buyers and digital marketers relied on a manual process to review campaigns, manage budgets, make adjustments to ad creative, and more. However, AI and machine learning have become so sophisticated that the manual days of optimizing are nearly over. Once your campaign has launched, the AI will start to learn what’s working and what’s not, shifting budgets and making adjustments to enhance efficiency in real-time. Studies show that by 2035, AI will boost productivity and profitability by nearly 40%.

As a leading digital marketing agency, Bluetext has seen the benefits of AI and machine learning pay off in major ways. Not only is our digital marketing team more efficient, but the results we were able to drive for clients in 2019 far exceeded their goals. With AI and machine learning only becoming smarter, Bluetext is equipped to reach new heights with programmatic advertising in 2020.

2. Digital Out of Home (DOOH) Opportunities

Digital Out of Home (DOOH) advertising is a unique marketing channel that allows advertisers to reach users outside of their homes in digitally-displayed spaces. Though DOOH media isn’t new, it’s expected to grow rapidly in the coming years. Reports show that between 2016 and 2023, the DOOH media channel will grow from $3.6 billion to $8.4 billion.
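
To put that projection in annual terms, the short Python sketch below computes the compound annual growth rate implied by the two figures. It assumes steady compound growth over the seven years, which is a simplification and not something the cited reports necessarily claim.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text: $3.6 billion in 2016 to $8.4 billion in 2023.
cagr = compound_annual_growth_rate(3.6, 8.4, 2023 - 2016)
print(f"Implied annual growth: {cagr:.1%}")
```

Under that assumption, the market would be growing by roughly 13% a year.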

With the average person spending 70% of their time outside of their home, investing in DOOH in 2020 is a no-brainer. From mobile geofencing to digital billboards, the opportunities to reach people on-the-go are vast. Most importantly, because DOOH is now offered through programmatic platforms, advertisers will have access to significantly more data from DOOH advertising than ever before.

Digital marketing agencies like Bluetext now have the ability to measure results from a digital billboard ad or an animated kiosk through the data and technology programmatic tools provide. Bluetext is looking forward to expanding DOOH capabilities in 2020 to connect the dots from in-home browsing to in-store purchasing.

3. Multi-Channel Advertising

In the past, advertising agencies had to leverage several different platforms, media vendors and direct buys to ensure they reached their audiences across all mediums. Programmatic advertising has simplified this process so that marketing agencies like Bluetext can execute all advertising efforts through one simple-to-use platform.

Programmatic solutions include paid social, display, video pre-roll, native advertising, geofencing, DOOH, connected TV and more. Digital marketing agencies like Bluetext now have the capability to run one digital campaign across all different mediums and – most importantly – track user behavior across all touchpoints. With AI and machine learning, programmatic campaigns will optimize to reach users at the right time on the right medium, whether that’s on the computer, on their smart TV, or in a store on their mobile device. In 2020, it’s expected that even more multi-channel solutions will be announced, such as voice-activated ads powered through devices like Alexa and Siri.

Programmatic advertising has opened the door for digital marketing agencies like Bluetext to effectively run and execute campaigns across a number of channels and mediums, making media dollars more efficient than ever before. With the expansion of programmatic solutions in 2020, there’s no limit to the number of possibilities Bluetext will be able to leverage to drive digital media success for our clients. To learn more about our work with programmatic advertising, contact us.

Driving engagement and other key metrics through organic social media is often an important component of a marketing campaign aimed at business executives. It complements any paid social or media, helps build awareness, and motivates target audiences to click through to a website or other campaign assets.

The question is, how do you determine the best timing in order to get the best results? This is especially tricky, given the short shelf-life of a Tweet, a Facebook post, or a LinkedIn feed. There are many myths regarding when to post organic social to drive the best results for a marketing campaign. Most of them are based on old, out-of-date assumptions, or gut instinct. Bluetext decided to test these to get hard data behind our campaigns.

The Old Common Wisdom on Social

There are some older pieces of conventional wisdom that have become ingrained in practitioners and that date back a dozen or so years to when social media campaigns were relatively new. Here are a couple that seem to make sense, but that we thought might be outdated given today’s “always-on” business culture:

- Don’t post on Mondays or Fridays. On Mondays, people are busy getting ready for the week and are likely to miss the posts. On Fridays, people are leaving early or checking out for the weekend. And never expect them to engage over their busy weekends.

- Avoid first thing in the morning and late in the day. It’s better to try other times when your target market isn’t so busy or trying to clear out of the office to get home.

Why We Wanted to Test Those Assumptions

Ultimately, we weren’t convinced that the older conventional wisdom was still valid. People work more flexible hours now than previously and are online and multi-tasking on a regular basis. Here at Bluetext, we wanted to get real data for ourselves so we could make the best recommendations for our clients.

How We Designed the Test

Working with a large client whose target audiences include business executives in the retail space, Bluetext designed a test that would send out social posts across the three platforms where the client has a significant presence and following: Twitter, LinkedIn, and Facebook.

We did this over a four-week period, sending out those posts at a different time of day each week. For Facebook and LinkedIn, we also sent out posts on different days of the week to see if and how that might make a difference. We looked at re-posts, replies, likes and link clicks.
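
One simple way to compare time slots in a test like this is to total the four engagement metrics for each platform and slot. The Python sketch below illustrates the idea. The sample rows are invented for illustration and are not the test’s results, and weighting the four metrics equally is an assumption.

```python
from collections import defaultdict

# Hypothetical rows: (platform, time slot, re-posts, replies, likes, link clicks).
# These numbers are made up for illustration only.
posts = [
    ("Twitter", "8:00 am", 2, 1, 5, 3),
    ("Twitter", "9:00 am", 4, 2, 9, 7),
    ("LinkedIn", "noon", 3, 1, 12, 8),
    ("LinkedIn", "9:00 am", 1, 0, 6, 4),
]


def engagement_by_slot(rows):
    """Sum all four engagement metrics for each (platform, slot) pair."""
    totals = defaultdict(int)
    for platform, slot, reposts, replies, likes, clicks in rows:
        totals[(platform, slot)] += reposts + replies + likes + clicks
    return dict(totals)


def best_slot(rows, platform):
    """Return the time slot with the highest total engagement for one platform."""
    totals = engagement_by_slot(rows)
    candidates = {slot: total for (p, slot), total in totals.items() if p == platform}
    return max(candidates, key=candidates.get)


totals = engagement_by_slot(posts)
for platform in ("Twitter", "LinkedIn"):
    print(platform, "->", best_slot(posts, platform))
```

A real analysis might weight link clicks more heavily than likes, or normalize by follower count on each platform.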

The Results

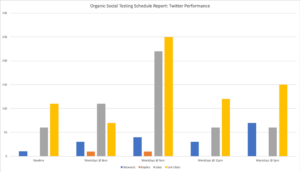

Contrary to the conventional wisdom, the best results for the test’s Tweets were for those posted at 9:00 am and 9:00 pm on weekdays, outperforming those sent at 8:00 am, noon, or mid-afternoon.

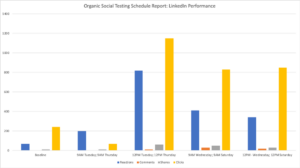

The best results for LinkedIn were for posts at noon on Tuesdays and Thursdays, with other positive results at 9:00 am on Wednesdays and Saturdays.

For Facebook, the best results came at noon on Tuesdays, Wednesdays, Thursdays, and Saturdays.

How to Leverage This New Data

Focus social posts around those best times and dates for each platform, but don’t ignore the other times or days of the week. Although posting content during “off-hours” might not deliver as much engagement, it will help to build awareness.

As marketing professionals improve at optimizing their advertising campaigns around personalized messaging and graphics for target audiences, a “Time Machine” strategy of targeting prospects will become more prominent in 2019.

What’s a Time Machine for marketing? It’s a new approach to targeting the best prospects based on what they might have done – or attended – in the past.

Tracking behavior via AI and advanced analytics of individuals who have attended an event in the past and targeting them for digital outreach offers a new avenue of personalized digital marketing. In one recent example, Bluetext identified everyone who had gone to see a sports team over the past year, with the intent of serving up ads with offers to buy season tickets or branded merchandise. Only recently have the data analytics and AI been available that could identify who attended a game over the past year. That’s why we call it “Time Machine” technology.

Yet, Time Machine strategies don’t stop at just attendance. Personalization also means setting up a whole system of user personas, messages for each persona, and specific creative that will appeal to them. Then, if prospect (A) performs action (B), they receive item (C), while prospect (X) performing action (B) will result in them receiving item (Z). So while two individual prospects may have both gone to the same game, getting them to buy the tickets for next season may require different messages to push them over the finish line. Tracking behavior via artificial intelligence and targeting audiences for digital outreach is just one of the ways that a “Time Machine” strategy leverages deep data as a new avenue of personalized digital marketing.
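
The “if prospect (A) performs action (B), they receive item (C)” logic can be sketched as a simple lookup table keyed by persona and action. The personas, actions, and creative descriptions below are hypothetical placeholders, not taken from a real campaign.

```python
# Hypothetical rules: (persona, past action) -> creative to serve.
CREATIVE_RULES = {
    ("family_fan", "attended_game"): "family four-pack season ticket offer",
    ("corporate_buyer", "attended_game"): "premium suite season ticket offer",
}

# Assumed fallback when no persona-specific rule matches.
DEFAULT_CREATIVE = "general team merchandise ad"


def pick_creative(persona, action):
    """Return the creative for this persona and action, or the default."""
    return CREATIVE_RULES.get((persona, action), DEFAULT_CREATIVE)


# Two prospects who took the same action receive different messages.
print(pick_creative("family_fan", "attended_game"))
print(pick_creative("corporate_buyer", "attended_game"))
print(pick_creative("student", "attended_game"))
```

In practice the rule set would likely live in a marketing automation platform, not in code, but the structure is the same: one action, several personas, a different message for each.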

Personalization is powerful because it solves the problem of relevance. Traditionally, ads were targeted at mass audiences, meaning that the vast majority of impressions were useless because they were not relevant to the viewer. A Time Machine strategy, paired with a robust personalization system, means that you can deliver more relevant ads and, in turn, have a better chance of converting more prospects.

At the end of the day, a Time Machine strategy is all about increasing relevance by matching an individual’s past behavior with a message and/or creative that compels them to repeat that behavior. Your brand’s value proposition, communicated in the prospect’s language, matching their expectations, and addressing their unique needs and desires, will be more meaningful with a Time Machine strategy.

Learn how Bluetext can help you make the most of the top marketing trends for 2019.

Dynamic content may be the next big thing for digital marketers. In the marketing world, as personalization becomes more in demand, the power of artificial intelligence and data analytics to deliver personalization through dynamic content generation is taking on a larger role. That artificial intelligence is taking the guesswork out of marketing. No longer do digital marketers need to “hope” that, for example, a blue-themed campaign landing page with lots of large images or a certain type of blog post will appeal to all audiences. Artificial intelligence can provide real insights on messaging, graphics, color schemes, and even the types of content that marketers push out to target audiences.

This evolution may be closer than you think. In fact, according to eMarketer, forty percent of marketers in a recent survey responded that they were currently using AI to fuel their dynamic creative. That number was consistent with audience targeting and segmentation, creating a clear path to real personalization. This tactic has given advertisers the unparalleled ability to know their audience through identification, segmentation, and targeting.

Because various types of testing can be done now thanks to AI, marketers are able to find the optimal way to target a particular consumer. This includes elements such as colors, fonts, calls to action and images. Targeted advertisements not only serve a consumer the product they were looking at in the days or weeks before, but do so in the way that would be most appealing to him or her, increasing the likelihood of engagement.

Beyond customizing the content that users are served, consumers are increasingly interested in curating their online experiences as well. That helps explain the endless number of social media platforms available to us.

We recently worked with the cognac company, Courvoisier, to launch their Honor Your Code campaign microsite which truly defines what we mean by “user-generated content.” The site encourages users to create a meme by uploading a photo, adding their hometown, and choosing a “code” that they respect and honor. Memes are then shared to social media, and ingested into the CMS by an Instagram API to display in a scrolling mosaic grid. Users are also able to explore the “Heatmap” by clicking on cities across the country to view other codes being shared and possibly even their own.

The Honor Your Code site on mobile provides for a unique user journey with more of a “native social” experience. Videos and Instagram posts display in a scrolling, digestible way users have become accustomed to. The site also features age authentication and a product locator.

There’s no question that people love personalized content. According to Janrain, 74 percent of online consumers get frustrated when content on the websites they visit has nothing to do with their interests. It’s clear that generating dynamic content for your audience is vital to a brand nowadays, so why not take a deeper dive into each individual’s profile to better understand what will work with that specific target audience? It is much more effective to serve content types and styles that the individual responds to instead of a blanket assumption of one-size-fits-all.


Our association clients often ask us what our process is for developing digital campaigns that will deliver the best results, whether that’s awareness for their brand or attendance for their conferences and events. Top marketing firms understand that a strong, repeatable process is key to getting the right messages and creative to the right audiences at the right time. They also know that analyzing results on a continual basis is the only way to optimize performance by revising and adjusting when needed.

At Bluetext, we take a disciplined approach to every campaign, and that begins with discovery. We start by asking three questions:

- What is the goal of the campaign?

- Who are the target audiences?

- What do you want them to do?

These might sound like simple questions, but you might be surprised at the discussion that follows within the association world to get to an agreement on each of those. Top marketing firms like Bluetext know that we have to act as both a facilitator and an honest broker who can push each stakeholder to reach that agreement. The reason is that at most membership and trade associations, there are different “clients” who have different goals for each campaign. In some cases, it might be membership renewal, while for others in the same organization it might be registration for an event that drives revenue. For others, it might be awareness of the services that the association provides.

But it’s not until that decision is made that we can move on to the next question: Who are you targeting? Again, that might sound simple, but we’ve witnessed our share of knock-down, drag-out fights inside organizations where stakeholders have different opinions of the audience. In some cases, part of the client team might be focused on entry-level IT professionals for their particular association, while their colleagues might believe that the true target is the mid-level professional seeking to move up in their career.

But the most difficult question seems to always come down to, What do you want them to do? The easiest response might be to do something that drives revenue, whether it is to become a member of the organization, or to renew their membership. It might also be to attend an event or purchase a service. But it might also be simple awareness of the value of the trade association and the services it brings to its members.

For each of these possible answers, there might be a multi-step process to take the action. We don’t expect, for example, a new prospect to commit to attending an annual conference just because they receive an email or see a banner ad or paid social post. It may take a sophisticated email “drip” campaign that methodically delivers different types of information that drive them down through the sales funnel before they click on a landing page for registration. Each step of the process requires clear messaging and strong creative. And each step requires effective analytics to measure performance of the subject line (for an email) or headline (for a banner ad). We are always A/B testing subject lines and headlines to see which are performing the best so we can adjust accordingly.
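
When comparing two subject lines, it helps to check whether the difference in open rates is larger than chance alone would explain. The sketch below uses a two-proportion z-test, a common statistical approach that is offered here as an illustration and is not necessarily the method Bluetext uses. The send and open counts are made up.

```python
import math


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for the difference between two open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that both subject lines perform equally.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: subject A opened 220 of 1,000 times, subject B 180 of 1,000.
z, p_value = two_proportion_z_test(220, 1000, 180, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value (conventionally below 0.05) suggests the better-performing subject line is genuinely ahead. With small lists, the test may need to run longer before drawing a conclusion.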

Top marketing firms will provide detailed methodologies and analytic tools before the campaign is launched so that organizations understand exactly how the campaign will run and achieve the desired results.

Learn How Bluetext Can Help Design and Execute Your Next Digital Campaign.

Marketing analytics may seem like a dry topic, but there’s an old adage in marketing communications: If you can’t measure it, you can’t manage it. With the advent of digital marketing, online outreach, marketing automation, and digital media, this is more important now than ever. In the digital world, measuring is both easier and more difficult, but it underpins every successful campaign.

Why is it so difficult – yet so critical – to have the right analytics? Let’s take a look at four key marketing channels one-at-a-time to understand why.

Media campaigns. In the analog days of print advertising, brands could see their advertising at work, in the pages of the publications that their industry would subscribe to and read from cover-to-cover. The circulation of publications was certified by outside organizations, so calculating the reach was a matter of doing the math. What was missing was a measurement of who saw the ad and how they reacted or engaged with the brand.

In today’s world of digital advertising, campaigns are sophisticated and programmatic, meaning they don’t go to every visitor to a publication’s website, but rather only to those whose characteristics match the target audience. We take it as an article of faith that our media partners are delivering the ads as intended. The only real way to measure success is by looking at the analytics of who clicked on the ad, and what action they took once they got to that landing page. That’s why the analytics are more crucial than ever.

Email outreach. Not too long ago, direct mail was a key element in most marketing campaigns. Today, that has been largely supplanted by email marketing. Besides the significant cost savings of email versus direct mail, it can also be much more targeted with a good database to start from. Adding the right marketing analytics to the emails can ensure that we know who has received the email, who has opened it, and who has clicked through to the landing page.

For our clients, we often will test different subject lines to see which results in the most engagement. That’s a key part of our analytics. We will also develop two compelling subject lines, and send the same email with the second subject line to any of the recipients who didn’t open it the first time. For those who never open the email, we may take them off the list after five or six emails, or keep them on the list to remain top-of-mind for when they might be ready to buy.
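
The re-send approach described above can be sketched in a few lines of Python. The recipient records, field names, and the `plan_resend` helper are hypothetical, and the cutoff of six unopened emails is an assumption based on the “five or six emails” mentioned in the text.

```python
MAX_UNOPENED_EMAILS = 6  # assumed cutoff, based on "five or six emails" in the text


def plan_resend(recipients, second_subject, drop_inactive=True):
    """Return (email, subject) pairs for recipients who did not open the first send.

    Each recipient is a dict with hypothetical keys:
    'email', 'opened' (bool), and 'unopened_streak' (int).
    """
    plan = []
    for person in recipients:
        if person["opened"]:
            continue  # already opened the email under the first subject line
        if drop_inactive and person["unopened_streak"] >= MAX_UNOPENED_EMAILS:
            continue  # treated as inactive and left off this send
        plan.append((person["email"], second_subject))
    return plan


# Made-up recipients for illustration.
recipients = [
    {"email": "a@example.com", "opened": True, "unopened_streak": 0},
    {"email": "b@example.com", "opened": False, "unopened_streak": 1},
    {"email": "c@example.com", "opened": False, "unopened_streak": 6},
]
plan = plan_resend(recipients, "Second subject line")
print(plan)
```

Passing `drop_inactive=False` keeps long-inactive recipients on the list, which matches the alternative of staying top-of-mind.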

Social media. It’s easy to judge the effectiveness of social media by the number of followers on each platform. That would be a mistake. Followers don’t always translate into engaged audiences or influencers. It’s too easy to be fooled by “bought” followers or people who automatically follow everyone as a way to build their own profile. More important is the influence of key followers, the ones that are known as thought leaders for that market and have their own brand and a substantial following. We would rather have a limited number of these types of individuals who have an interest in and will engage with our clients’ brands, than a large number of followers unrelated to the business. Analytics tools that measure this type of impact are key.

Traditional public relations. In the pre-internet days, we could measure the impact of a PR campaign much like we did advertising: add up the number of subscribers for each publication that runs an article for a total reach. Again, that tells us little about the impact that article might have. In today’s digital age, we are more interested in strategic coverage that focuses on our clients or positions them as thought leaders – either through authored bylines or being quoted for their insight – than the mere number of hits.

Successful digital marketers are constantly evaluating where to put their resources, and how to measure the programs they are funding in terms of lead generation and sales. Advanced marketing analytics allow companies to go far beyond baseline metrics, by providing the tools to really understand how their target buyers are consuming content, what entices them to engage and interact, and what triggers a conversion. In-depth analytics, including multivariate as well as A/B testing, provide the types of information that enable more automation and personalization to map to each buyer’s journey. Getting that data in real-time from the right analytics and tools will offer the most current insights for reacting quickly and putting the best content in front of that audience, responding to what’s happening now, not what took place a week or month earlier.

Our recommendation is to invest in marketing analytics that will provide real-time, data-driven insights to meet your marketing and revenue goals.

Learn how Bluetext can help develop marketing analytics that help your brand meet its marketing goals.

Google recently updated its ad muting tools to give users even more control over ads that auto-play in their feeds. In its blog post announcing this capability, Google reiterated its commitment to transparency and to users’ control over their own data. The new tool allows users to go into their Google Ad settings, see which ads are targeted at them, and select those ads for a sound-off setting. In addition, it applies ad muting across all of a user’s devices.

Sounds great for users, but what about for marketers who are trying to get their ads in front of potential customers who have expressed interest in the product or service? After all, retargeting potential customers who may be solid prospects due to the interest they’ve expressed can be a successful arrow in a marketer’s quiver, while ad muting may seem like a killer.

The immediate reaction in the marketing world was that the sky was falling with the new Google ad muting tool. We think that’s an over-reaction. In fact, we are strong believers that the more that target customers believe that the ads they are seeing are appropriate and of interest – and not annoying, irrelevant, and out-of-date – the more likely they will have the confidence to engage with the ads.

Here are our four tips for making sure your online targeting will be successful, and not fall on deaf ears:

- Don’t use auto-play in your retargeting campaigns. This might seem obvious, but there’s a reason that Google upgraded its ad settings for users: they keep complaining about auto-play ads. Yes, these ads do force viewers to react, but that’s not always a good thing. If a target audience wants to engage, they will do it because of the content, and not because of auto-play.

- Limit your retargeting for each user. One of the complaints about auto-play in response to Google’s announcement is that these ads often target users for months, even though the interest may have vanished after days. For our retargeting campaigns, we recommend no more than six ads. If the target customer hasn’t engaged at that point, we don’t believe additional placements will help.

- Make sure your ads have great creative. This should also be obvious. Target customers are far more likely to notice and react to an ad that gets their attention in a good way. That means professional creative with a message that says something to your targets. It doesn’t always have to be humorous or outrageous to get their notice. But it does need to be good.

- Make it count. Being relevant and timely is what users really want. That means paying attention to when the user expressed interest and acting quickly before they move on to another solution.
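
The frequency and timing tips above can be expressed as a simple eligibility check. In this Python sketch, the cap of six ads comes from the recommendation above, while the 14-day interest window and the `should_serve_ad` helper are assumptions chosen for illustration. The text says only that interest may vanish after days.

```python
from datetime import datetime, timedelta

MAX_RETARGETING_ADS = 6               # cap recommended in the tips above
INTEREST_WINDOW = timedelta(days=14)  # assumed window; not specified in the text


def should_serve_ad(ads_served, interest_time, now):
    """Serve a retargeting ad only if under the cap and interest is still recent."""
    if ads_served >= MAX_RETARGETING_ADS:
        return False
    return now - interest_time <= INTEREST_WINDOW


# Fixed, made-up timestamps for illustration.
now = datetime(2020, 1, 31)
print(should_serve_ad(2, datetime(2020, 1, 25), now))  # under the cap, recent interest
print(should_serve_ad(6, datetime(2020, 1, 25), now))  # cap already reached
print(should_serve_ad(2, datetime(2019, 11, 1), now))  # interest is months old
```

Most ad platforms expose frequency caps and audience membership durations as campaign settings, so logic like this is usually configured in the platform and not hand-coded.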

Looking to make your digital media campaigns more effective? Learn how Bluetext can help.

A successful digital campaign needs to connect with its audience while simultaneously getting the company’s message across. Digital marketers spend a huge amount of time analyzing their target market and audience before building a campaign and crafting an implementation strategy for seamless execution. Here are five tips to help your company create a successful digital campaign.

- Know your personas. Personas are fictional characters representing a company’s potential customers. Each persona has its own role, goals, challenges, company, job, skills, preferences, and so forth. Understanding your personas and building a detailed profile for each is a key step in creating an effective digital campaign.

- Analyze your competitors. Keep an eye on the public-facing marketing efforts of your competitors to understand how they are targeting their consumers. A better understanding of your competition provides insight into how you should position yourself in the market to stay ahead.

- Optimize your SEO. Understand the keywords your personas are searching for on search engines and integrate those keywords in your digital campaign’s SEO strategy. Optimize the metadata of your campaign by integrating your target keywords in your campaign’s title, content, meta description, URL, and image alt text.

- Set an offer strategy. Once your digital campaign has successfully captured a consumer’s attention, you need an offer strategy to draw them in. A common approach is through the promotion of gated premium content. Understanding the content that appeals to each of your personas will direct the premium content offer that should be tailored for each. A complete profile for each persona will guide a company’s content creation and fill any gaps in its content offerings.

- Create a lead strategy. Although generating leads is the goal of a digital campaign, it is not the end goal. An internal strategy needs to be in place to continuously inform and engage a lead, whether through email or other mediums, with the end goal of transitioning a lead into an eventual customer.

A successful digital campaign requires a significant amount of planning before it can be built, tested, and implemented. An adept understanding of the market environment and a solid SEO and content strategy are the key factors in launching a successful digital campaign.

Looking for best in class digital marketing? Contact us.

In the arena of top marketing firms, data-driven marketing seems like the key buzzword of the past few years. In fact, it’s no passing fad. Leveraging analytics to reach target customers has become a key component of any successful digital campaign. According to a recent survey by the Global Alliance of Data-Driven Marketing Associations, employing a data-driven approach has become the backbone of just about any campaign or messaging, whether it’s targeting the right audience or even predicting potential success. And as marketing technology continues to make inroads across the industry, it should not be surprising that more businesses want to take a data-driven approach to their marketing.

The use of data to improve the effectiveness of marketing, and to measure it, is virtually universal these days. In fact, a recent study found that only one in 10 marketers still don’t use data. In addition, even more complex data techniques, such as integration of third-party data and cross-channel measurement, were found to be widely used.

The survey found that nearly 80 percent of advertising and marketing professionals are now using data-driven techniques to maintain customer databases, measure campaign results across multiple marketing channels, and segment their data for proper targeting. Again, for those of us at the top digital marketing agencies, this finding makes perfect sense. Marketing automation platforms, when configured properly, can easily deliver this type of feedback, and those platforms have been aggressively showcasing these capabilities.

The survey, which targeted both advertising and marketing executives from a wide variety of industries, notes a clear shift in spending patterns. The survey respondents reported a strong expectation that spending on data-driven efforts would continue to rise. Nearly two-thirds of the respondents said their spending on data analytics for marketing would rise, while only seven percent said they expected a decline in spending in the coming year.

Data-driven marketing isn’t a perfect solution, at least not yet, which is not unexpected for a relatively new approach that relies on new technologies. A recent analysis from Square Root found that a challenge for many companies is gathering the type of high-quality data that is necessary to optimize results. Data analysts are searching for more effective ways to collect, manage and understand data. Forty-four percent of the survey respondents reported that they were still using outdated tools. A similar share believed they could make decisions without in-depth data. According to the survey, over a quarter of respondents cited other time wasters, from data source overkill to bad numbers. Square Root’s study, in particular, found that more than half of data professionals felt they could use better training, closely followed by another 49% who desired more user-friendly or updated data tools.

But chief marketing officers and other executives wouldn’t be making these investments if they didn’t think they deliver results. They recognize the benefits to the bottom line.