Marketing, unfortunately, isn’t an exact science. Nor is there ever a “one size fits all” solution to business objectives. There are hundreds of marketing best practices, but sometimes those best practices aren’t the best fit for the goals you’re trying to achieve. To make sure you’re driving the strongest results, you need to test! You can’t optimize what you don’t test.

At Bluetext, we are big advocates of A/B testing; we use it across many marketing initiatives, from landing pages and email templates to calls to action, banner ads, and everything in between. And by hiring a marketing analytics agency, you can take the guesswork out of deciding which tactics best fit your business needs.

What is A/B Testing?

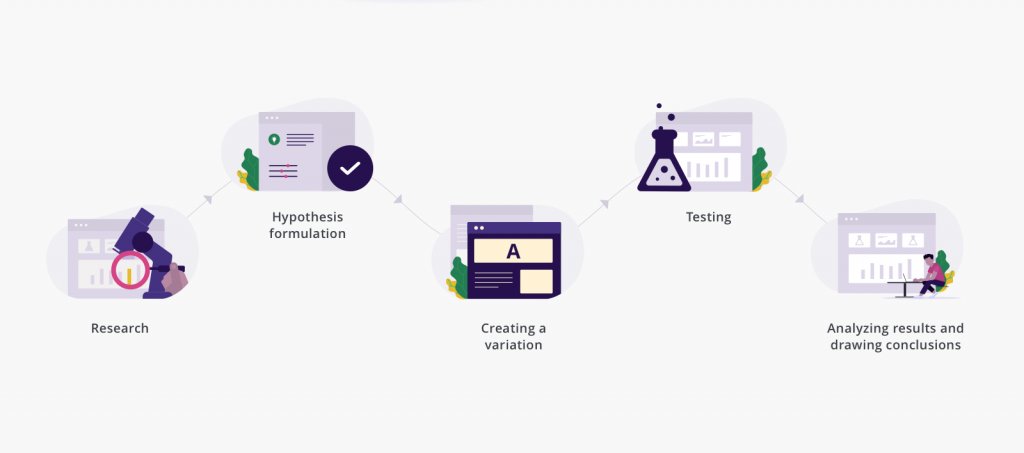

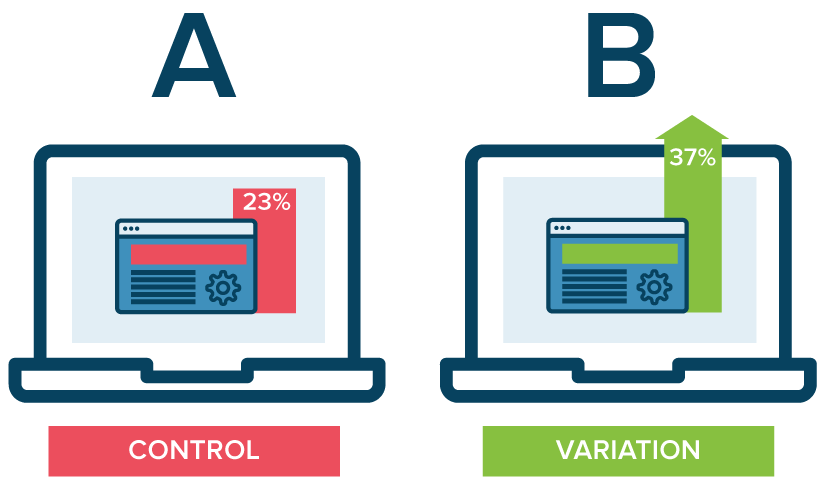

A/B testing (also known as split testing) is a simple, effective way to understand your audience better and improve overall campaign performance. In an A/B test, two (or more) variants of a specific asset are shown to users at random, and statistical analysis determines which variant performs better against your ultimate goal. Marketers typically start with a control asset, then run a variant alongside it and measure the results. Sound familiar? It’s the same scientific method you learned in primary school! The only difference is that you can turn these stats into real business revenue, not just a lab report.
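For readers curious how "shown to users at random" typically works behind the scenes, here is a minimal sketch, not a description of any particular testing platform. It assumes each visitor has a stable ID and hashes that ID together with an experiment name, so traffic splits roughly evenly while each visitor keeps seeing the same variant. The function name `assign_variant`, the `"cta-test"` label, and the 50/50 split are hypothetical choices for illustration.

```python
import hashlib
from collections import Counter


def assign_variant(user_id, experiment, variants=("control", "variant_b")):
    """Hypothetical helper: deterministically bucket a visitor into one variant.

    Hashing "experiment:user_id" gives an effectively random but stable
    assignment, so a returning visitor always sees the same version.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Simulate 10,000 hypothetical visitors and check that the split is roughly even.
split = Counter(assign_variant(f"user-{i}", "cta-test") for i in range(10_000))
print(split)
```

Hashing (rather than calling a random number generator on every page view) is one common design choice because it keeps the experience consistent for each visitor without storing their assignment anywhere.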

A/B testing takes the assumptions and the ‘best practices’ out of the equation. Marketing, as we mentioned, isn’t an exact science; we don’t know what we don’t know! A/B testing relies on statistical data to help marketers understand and verify what resonates best with their audience.

How A/B Testing Works

A/B testing can be executed in several ways. If you’re running a display ad campaign and want to test animated ads against static ads, you may not have a true ‘control.’ Instead, you launch both variants at the same time and, once you’ve collected enough data to reach statistical significance, pause the underperforming variant.

Alternatively, you may want to improve your landing page conversion rates. In this case, you take your current landing page (the control) and change one element on it; that modified page becomes your variant. Crazy Egg, the popular heatmapping tool, notes that A/B testing landing pages is useful because it “lets you see what elements really click with your audience and which don’t. Changing just one word in your CTA can reveal nuances about your target audience that you never would have otherwise discovered.”

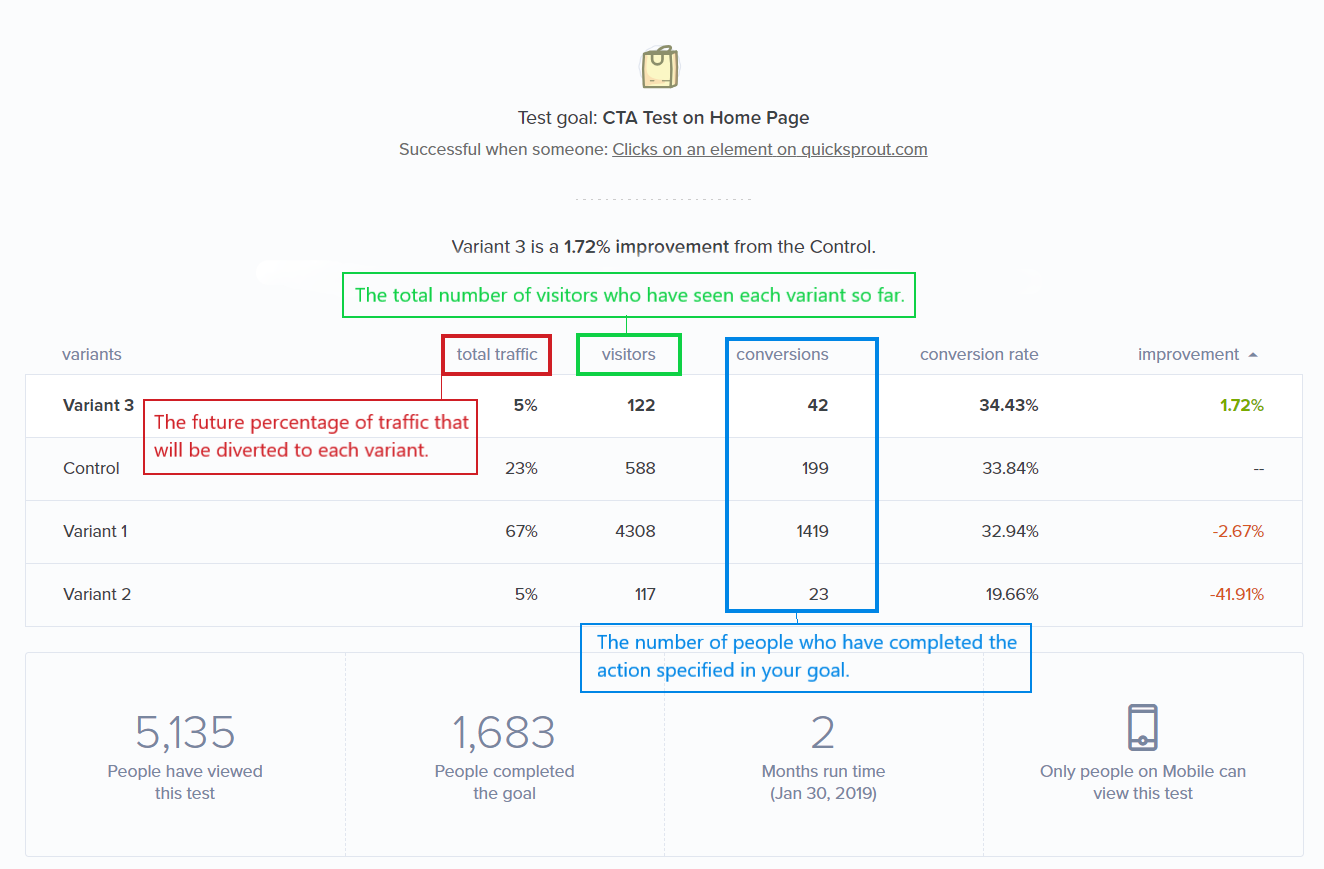

Once your test reaches statistical significance, you have the data you need to decide whether to keep your control landing page live or redirect all traffic to your new landing page.
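Most A/B testing tools calculate significance for you, but a small sketch can make "statistically significant" less abstract. The example below assumes a two-proportion z-test, one common way to compare two conversion rates (not necessarily the method any given platform uses), along with a conventional 0.05 threshold and made-up visitor counts.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a / n_a: conversions and visitors for the control page.
    conv_b / n_b: conversions and visitors for the variant page.
    Returns (z, p_value). Assumes visitors were randomly assigned and that
    samples are large enough for the normal approximation to hold.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that both pages convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control converts 200 of 5,000 visitors (4.0%),
# the variant converts 260 of 5,000 visitors (5.2%).
z, p = two_proportion_z_test(200, 5000, 260, 5000)
alpha = 0.05  # conventional significance threshold (an assumption, not a rule)
print(f"z = {z:.2f}, p = {p:.4f}, significant: {p < alpha}")
```

With these illustrative numbers the p-value lands well below 0.05, so the variant's lift would count as significant. In practice it is safer to decide the sample size before launching and evaluate once it is reached, rather than stopping the moment the p-value first dips below the threshold.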

The biggest benefit of A/B testing is that it produces actionable results: a well-run test typically ends with a clear winner and insights into what resonates with your audience, giving you the tools you need to improve your marketing performance.

You Have A Winner! Now What?

Once your test has reached statistical significance and produced a clear winner, what’s next?

Start implementing! Don’t waste any more time (or money) driving users to a lower-converting landing page or showing underperforming ads to your audience. Take the winner of your A/B test, apply it, and watch your KPIs improve.

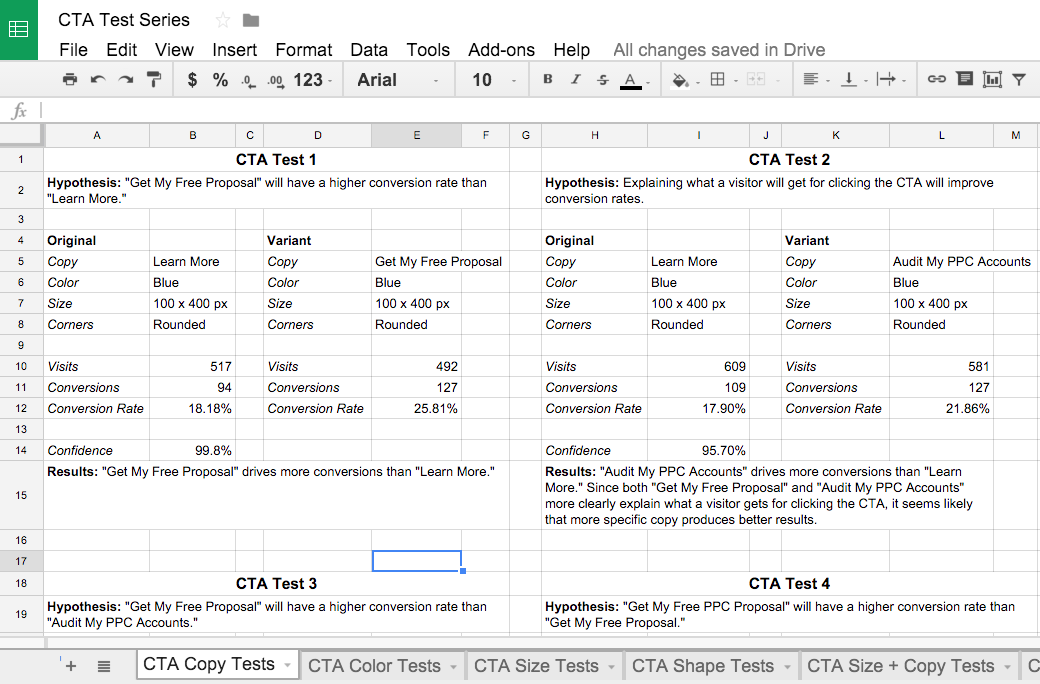

Of course, testing is a marathon, not a sprint. Testing one asset is only the beginning; there are hundreds, even thousands, of other tests you could (and should) run. A/B testing should be an ongoing part of your marketing efforts, not a one-and-done exercise. Remember: you can’t optimize what you don’t test.

When deciding on your next A/B test, make sure you’ve analyzed your most recent one and understand why the winner actually won. What did you learn? Did a new call to action boost your conversion rate? What exactly did you change in the CTA, and why do you think that small change increased conversions? If the new CTA worked on that landing page, where else could you apply it?

It’s critical to take the time to ask yourself these small questions; they help you understand the bigger picture. Chances are you’ll come away from your latest test analysis understanding your target audience even better, with an idea for your next big A/B test.

One thing we can’t stress enough: document your A/B tests! Record everything: the setup, the control and variant, the winner, and your analysis. Save it somewhere safe. As one article on Medium puts it, documenting your tests “leads to deeper understanding and context. In turn, that understanding sparks new ideas, causes stronger hypotheses, and prevents running similar tests twice.” Always review your previous A/B test documentation before jumping into the next one!

Now that you know what A/B testing is, how to approach it, and how to build on your learnings, what will your next (or first!) A/B test be? Visit our site to learn how Bluetext has helped clients achieve stronger KPIs and boost conversion rates through strategic A/B testing.